Maximum Solution Depth

How many solution layers does Dataverse support? Microsoft’s documentation on Solution Layers doesn’t specify a limit. Deploying updates via a higher version of the same solution is better practice, but would using separate managed solutions for every update eventually cause the system to hit a wall, bog down, or worse, collapse?

The Problem

A maximum number of solution layers is not specified. Is it safe to keep adding layers?

The Helpful Bit

This is by no means exhaustive, but I decided to run a simple test: deploy more solution layers than any developer in their right mind would create. Doing so manually didn’t sound practical or fun, so I created a basic solution, wrote a simple script to redeploy it as a new managed solution repeatedly, and left it to run on a clean environment overnight.

The Script:

##SOLUTION DEPTH CHECK##

##Modifies a given solution and redeploys it under a new unique name iteratively to test maximum solution layer depth.

#Set Variables

$SolutionName = "LayerDepthTest"

$SolutionsFolder = ".\Solutions"

$SourceEnvURL = "https://demoenvironment.crm.dynamics.com"

#Retrieve Target Solution

#Authenticate to Source Env

Write-Output "Authenticating to $SourceEnvURL..."

pac auth create `
--name "CDSAuth" `
--url $SourceEnvURL

#Publish & Export Solution.

if(Test-Path $SolutionsFolder\$SolutionName.zip)

{

Write-Host "Deleting $SolutionName.zip"

Remove-Item $SolutionsFolder\$SolutionName.zip

}

pac auth select --name "CDSAuth"

pac solution publish

pac solution export `
--name $SolutionName `
--path $SolutionsFolder\$SolutionName.zip `
--async `
--managed `
--max-async-wait-time 30

#Unpack Solution.

pac solution unpack `
--zipfile $SolutionsFolder\$SolutionName.zip `
--folder $SolutionsFolder\$SolutionName `
--packagetype 'Managed' `
--allowDelete `
--allowWrite `
--clobber

### BEFORE CONTINUING, DELETE THE SOURCE COMPONENTS FROM THE TARGET ENV.

### OTHERWISE, THE IMPORT FAILS WITH AN ERROR CONVERTING COMPONENTS FROM UNMANAGED TO MANAGED.

#For N++: Update & Repack new version of SLN and Deploy.

$Layer = 0 #Starting Layer

$n = 1000 #Number of layers to add.

Write-Host "Adding $n layers, starting at $Layer"

for($i=1; $i -le $n; $i++)

{

Clear-Host

$suffix = "{0:000000}" -f $Layer

Write-Host Updating Layer $suffix - $i / $n -ForegroundColor DarkGreen

#Rewrite the UniqueName in Solution.xml so the import creates a new solution (and layer).
Get-ChildItem -Path "$SolutionsFolder\$SolutionName\Other\Solution.xml" |
ForEach-Object {
(Get-Content $_.FullName) `
-replace '<UniqueName>(.*)<\/UniqueName>', "<UniqueName>${SolutionName}_$suffix</UniqueName>" |
Out-File $_.FullName
}

##Repack Solution

Clear-Host

Write-Host Packing Layer $suffix - $i / $n -ForegroundColor DarkGreen

pac solution pack `
--zipfile "$SolutionsFolder\${SolutionName}_Managed_$suffix.zip" `
--folder $SolutionsFolder\$SolutionName `
--packagetype 'Managed' `
--clobber

##Import Solution & Publish All

Clear-Host

Write-Host Importing Layer $suffix - $i / $n -ForegroundColor DarkGreen

pac auth select --name "CDSAuth"

pac solution import `
--path "$SolutionsFolder\${SolutionName}_Managed_$suffix.zip" `
--force-overwrite `
--async `
--max-async-wait-time 60

pac solution publish

Write-Host Layer $suffix Imported. - $i / $n -ForegroundColor DarkGreen

$Layer++

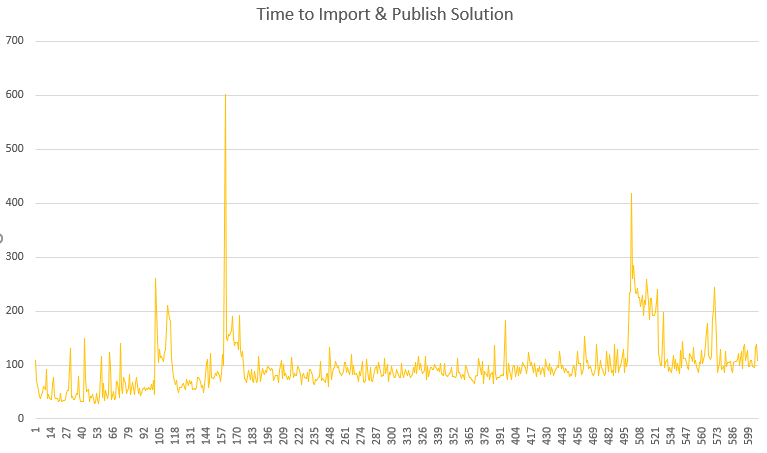

}Results: While I expected each run to take exponentially longer, Powerapps handled 500+ layers surprisingly well. Even after 600 rounds, it was still taking only 2 minutes to deploy and publish each layer.

The environment itself also showed no sign of sluggishness.
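The per-layer timing is easier to judge when each import is measured and logged rather than watched on screen. A minimal sketch, assuming a hypothetical helper named Measure-LayerStep and a hypothetical log file layer-timings.csv (neither is part of the original script):

```powershell
# Hypothetical helper: times one step of the loop and appends the result to a CSV.
function Measure-LayerStep {
    param(
        [int]$Layer,                                  # layer counter from the loop
        [scriptblock]$Action,                         # the step to time, e.g. the import
        [string]$LogPath = ".\layer-timings.csv"      # assumed log location
    )

    # Measure-Command returns a TimeSpan for the wrapped script block.
    $elapsed = Measure-Command { & $Action }

    $row = [pscustomobject]@{
        Layer   = $Layer
        Seconds = [math]::Round($elapsed.TotalSeconds, 1)
    }

    # -Append creates the file on first use and adds one row per call afterwards.
    $row | Export-Csv -Path $LogPath -Append -NoTypeInformation
    return $row
}

# Possible use inside the loop (commented out because it needs an authenticated pac session):
# Measure-LayerStep -Layer $Layer -Action { pac solution publish }
```

Note that Measure-Command hides the wrapped command's console output, so the existing Write-Host progress lines remain useful alongside it. Plotting Seconds against Layer from the CSV would show whether deploy time creeps up as layers accumulate.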

That said, I did NOT spend time creating a large number of components. The script merely saves the same solution with a new unique name and reimports it as a new solution layer to the target environment. A complex solution might not fare so well.
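The renaming step can be tried on its own, without touching an environment. The sketch below applies the same suffix format and UniqueName replacement to a sample string; the function name Set-LayerUniqueName and the sample XML are illustrative assumptions, not a real Solution.xml:

```powershell
# Stand-in for the contents of Other\Solution.xml (simplified, hypothetical sample).
$SolutionName = "LayerDepthTest"
$xml = '<SolutionManifest><UniqueName>LayerDepthTest</UniqueName><Version>1.0.0.0</Version></SolutionManifest>'

# Hypothetical helper: returns the XML with a zero-padded layer suffix in UniqueName.
function Set-LayerUniqueName {
    param(
        [string]$Xml,
        [string]$BaseName,
        [int]$Layer
    )

    # Same six-digit padding as the script, e.g. 7 becomes 000007.
    $suffix = "{0:000000}" -f $Layer

    # Swap whatever unique name is present for BaseName_suffix.
    $Xml -replace '<UniqueName>.*?</UniqueName>', "<UniqueName>${BaseName}_$suffix</UniqueName>"
}

Set-LayerUniqueName -Xml $xml -BaseName $SolutionName -Layer 7
# <SolutionManifest><UniqueName>LayerDepthTest_000007</UniqueName><Version>1.0.0.0</Version></SolutionManifest>
```

Because only the unique name changes, every import carries identical components, which is why each run adds a layer without adding any complexity.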

Conclusion

While not best practice, you can rest easy that a high number of solution layers probably isn’t going to grind your environment to a halt.

Resources

- MSFT – Solution Layers:

https://docs.microsoft.com/en-us/powerapps/maker/data-platform/solution-layers